Multimodal Neurons in Artificial Neural Networks

Gabriel Goh, Nick Cammarata †, Chelsea Voss †, Shan Carter, Michael Petrov, Ludwig Schubert, Alec Radford, Chris Olah

Outline

- Motivation & Results

- Background

- Feature Visualization

- A primer on CLIP

- Multimodal Neurons

- Faceted visualization

- Person Neurons

- Region Neurons

- Typographic Attacks

- Conclusion

Motivation & results


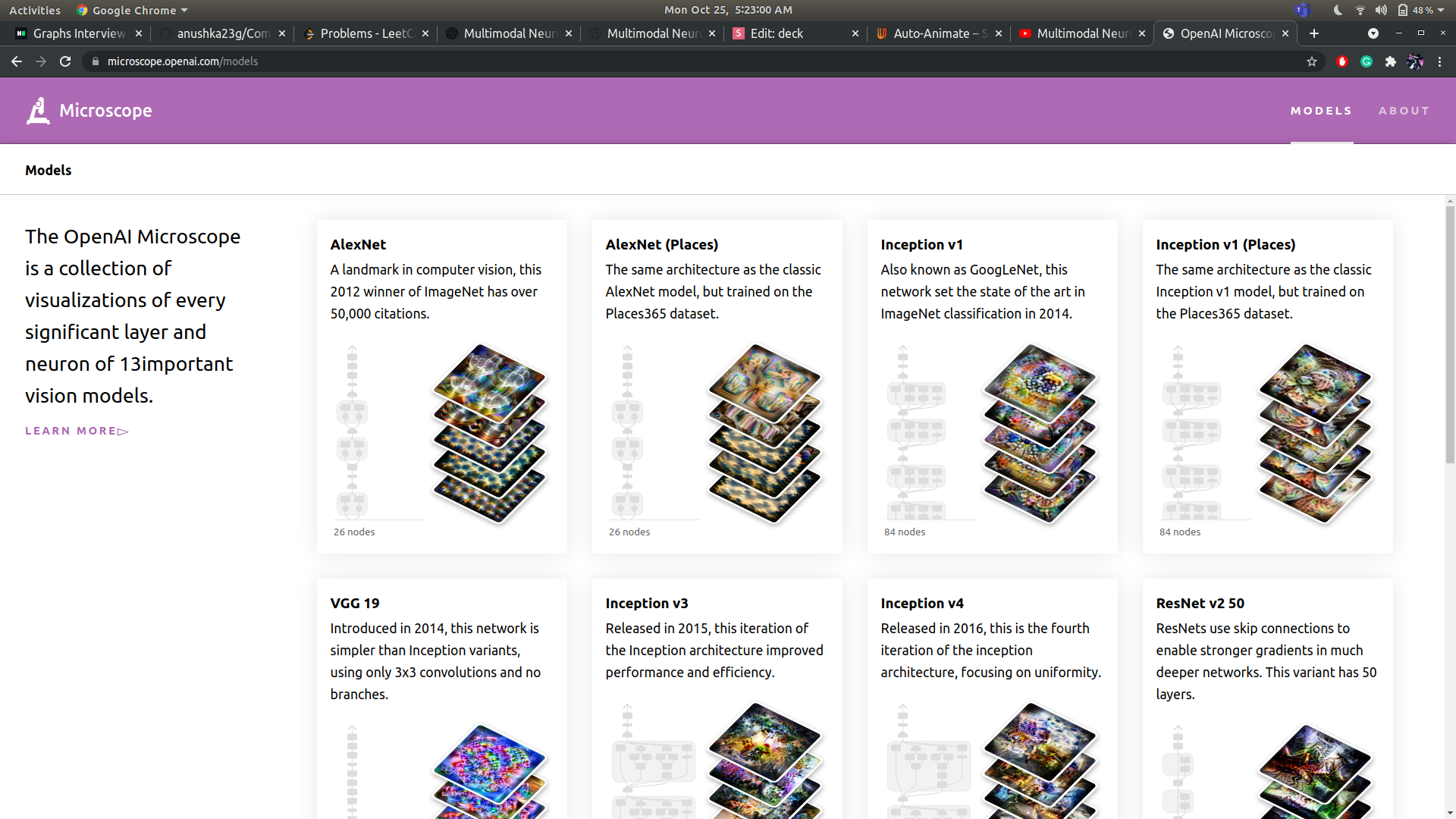

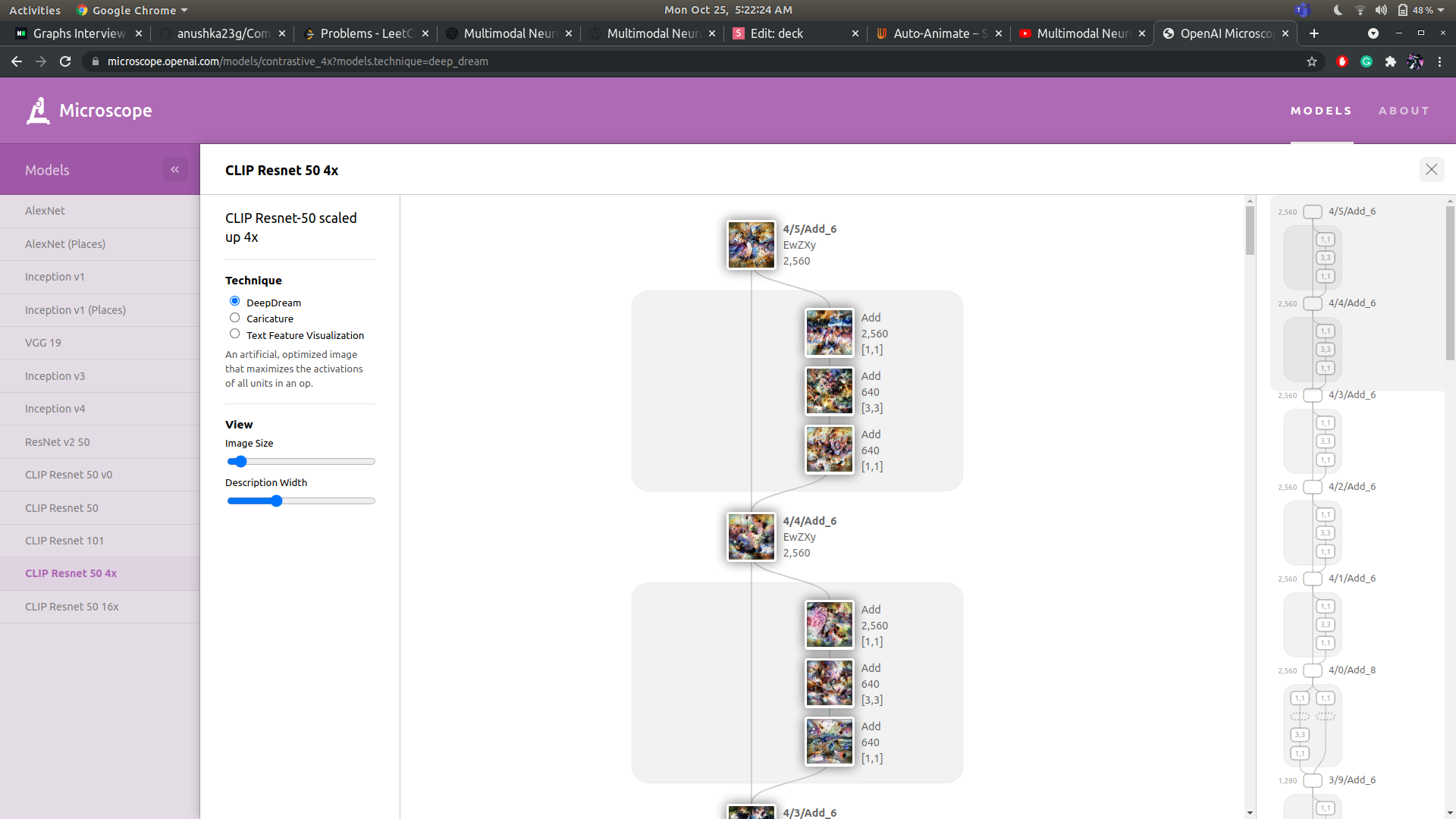

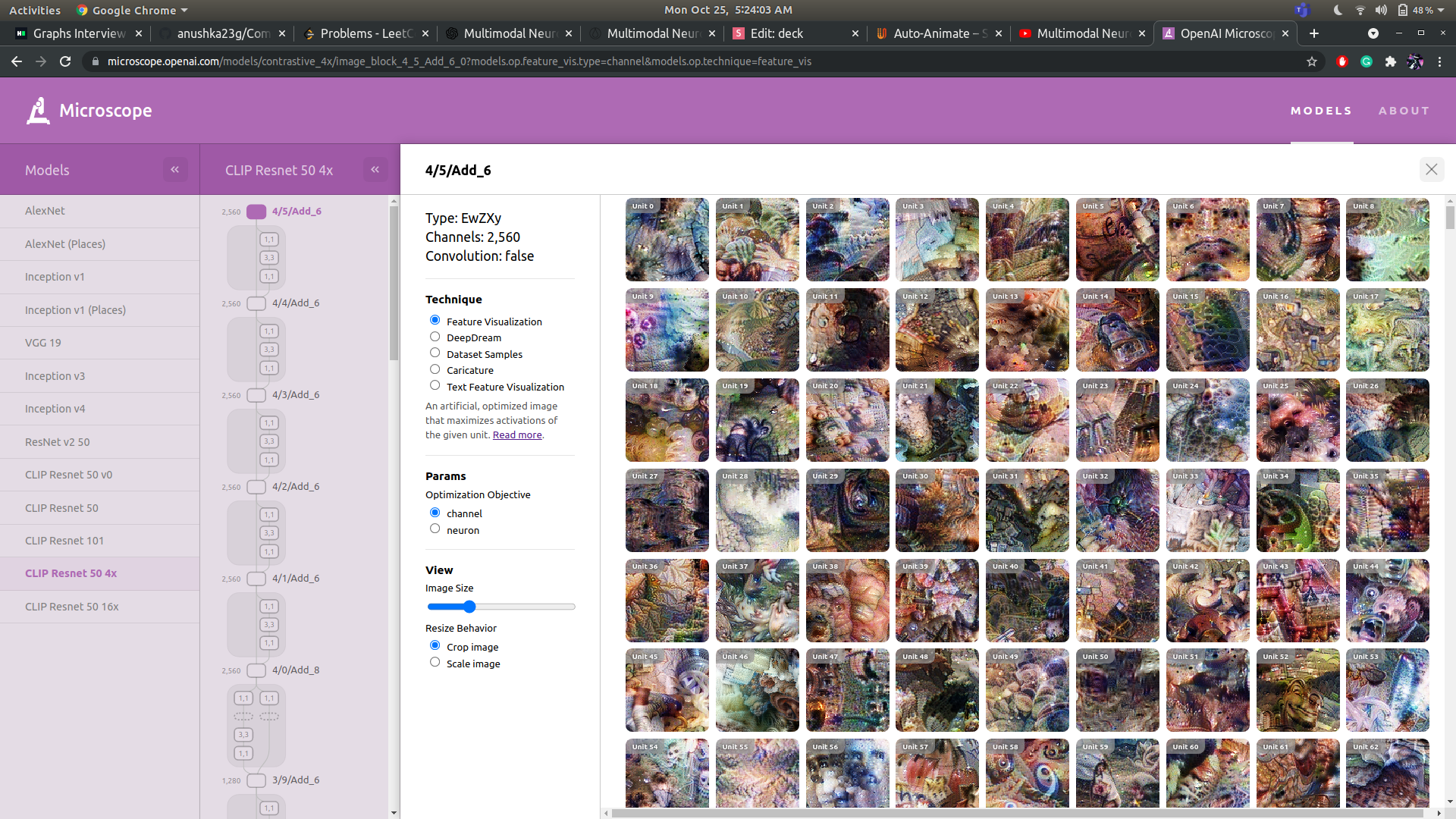

microscope



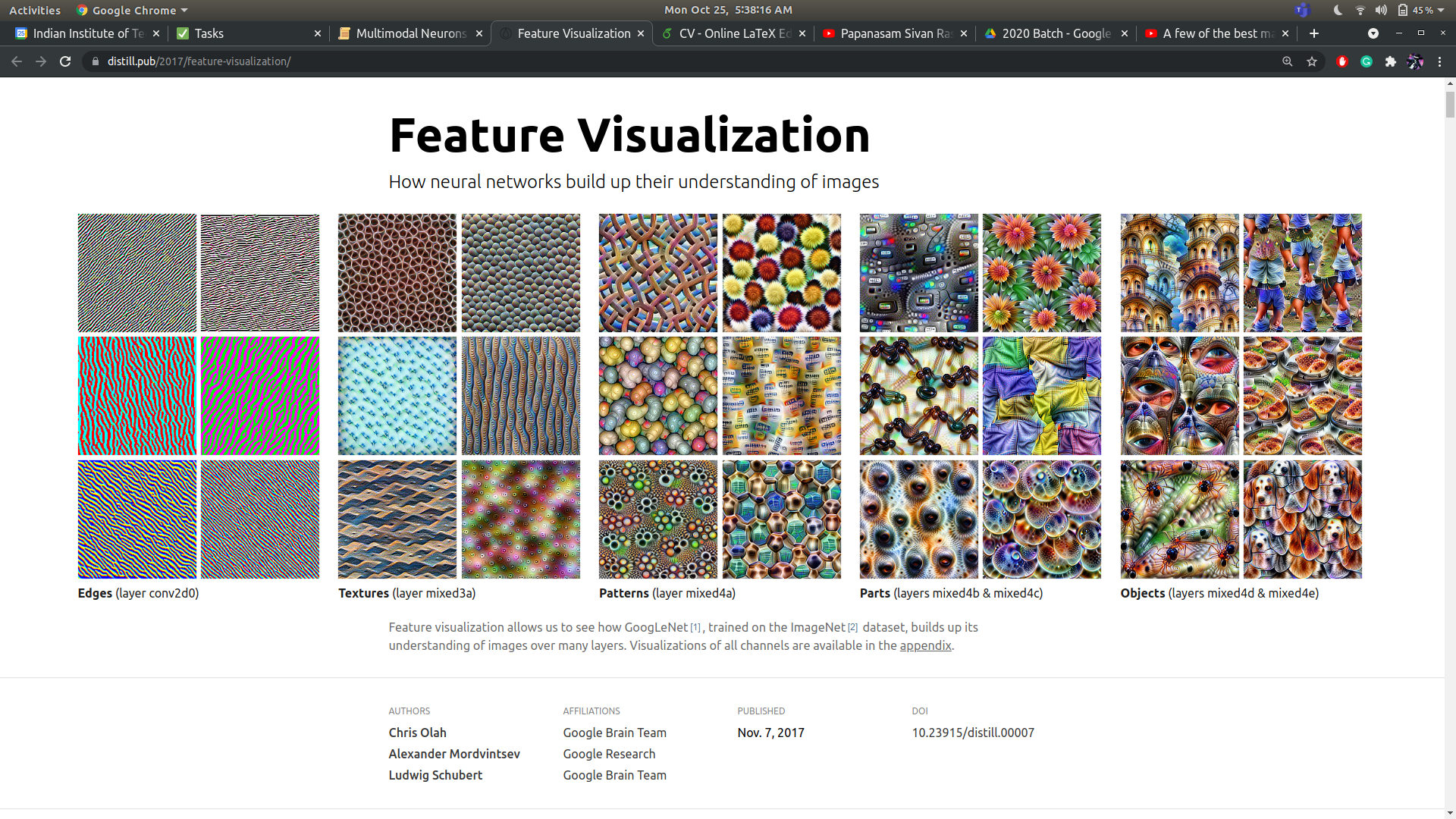

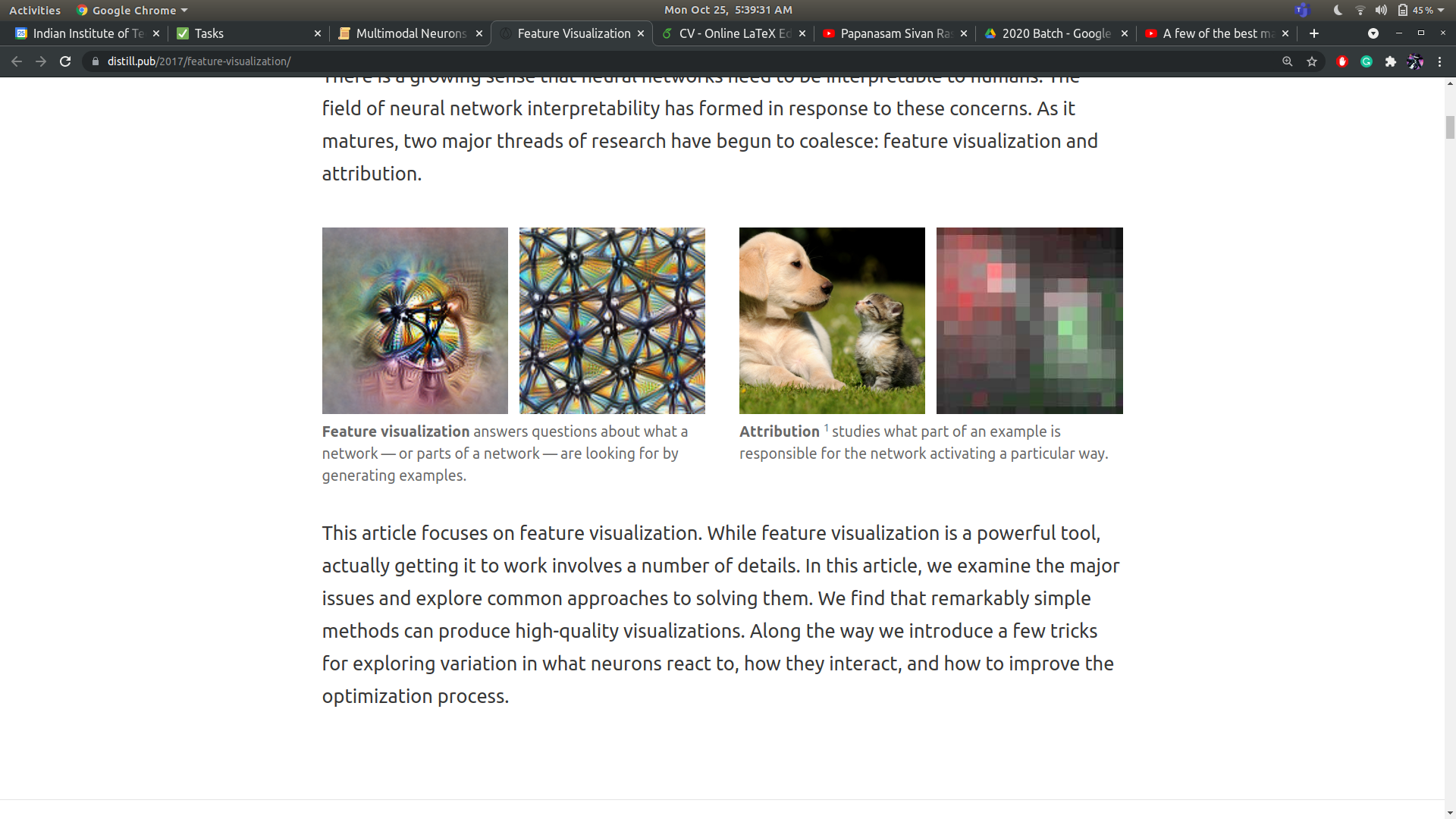

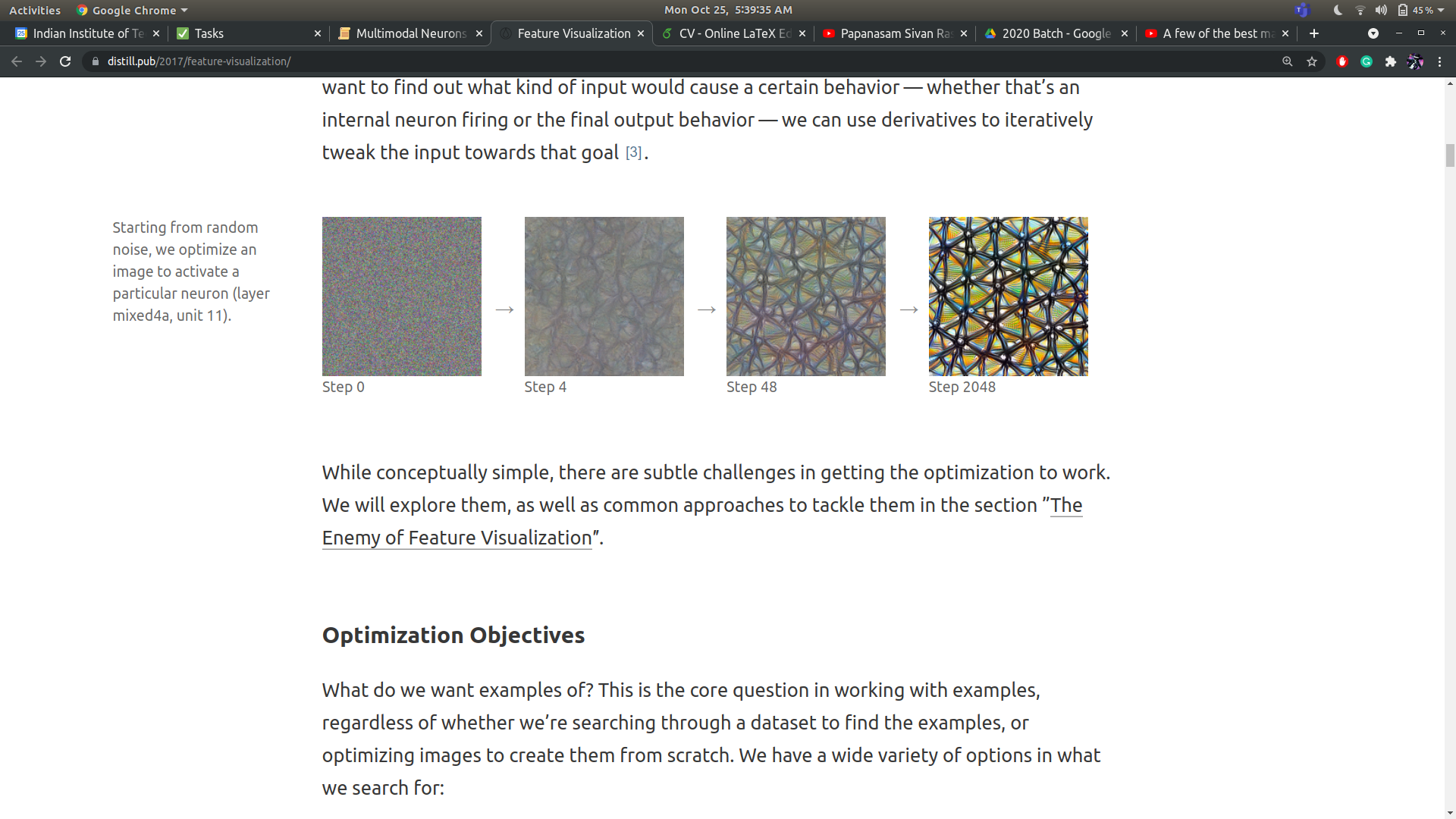

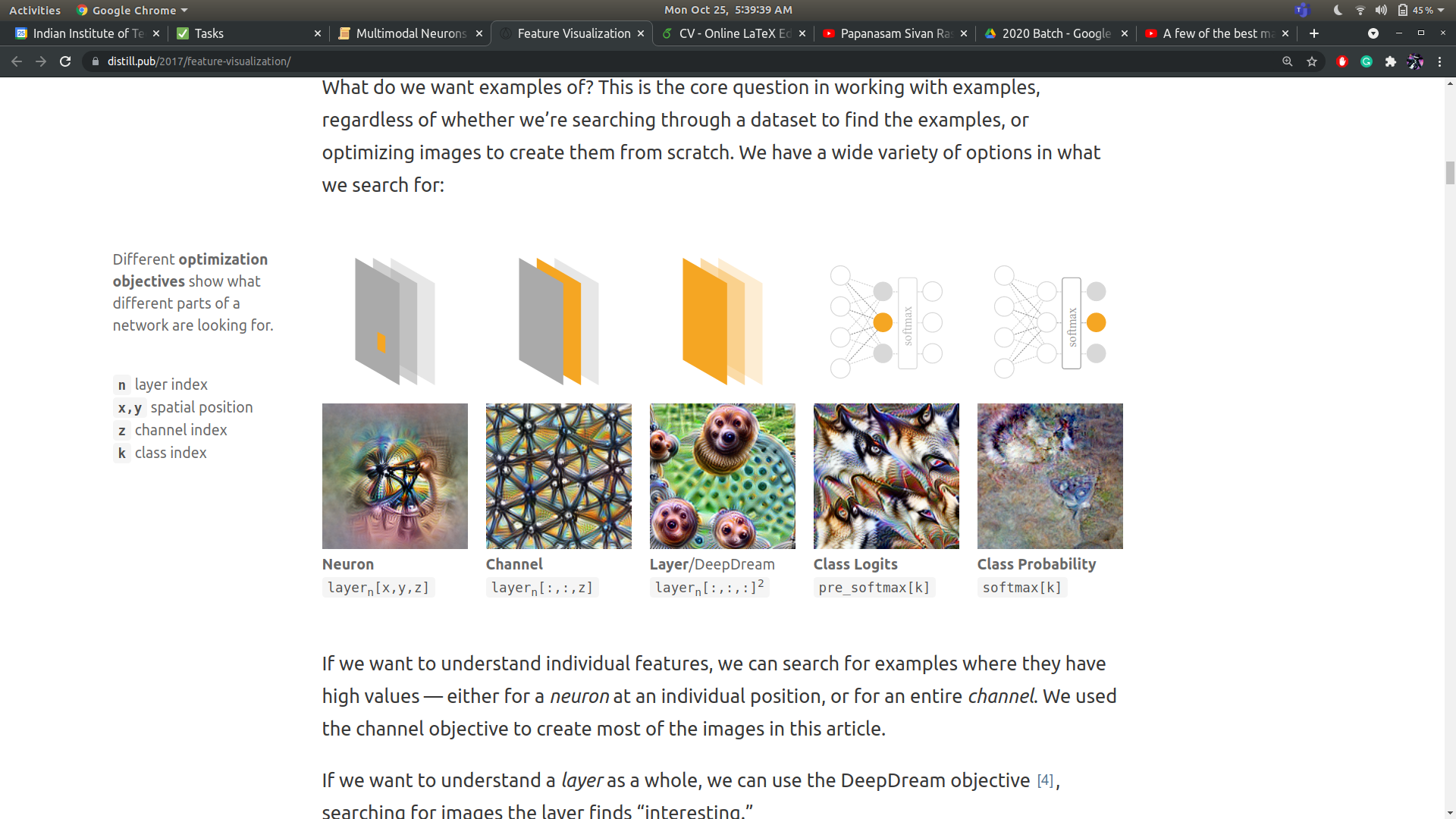

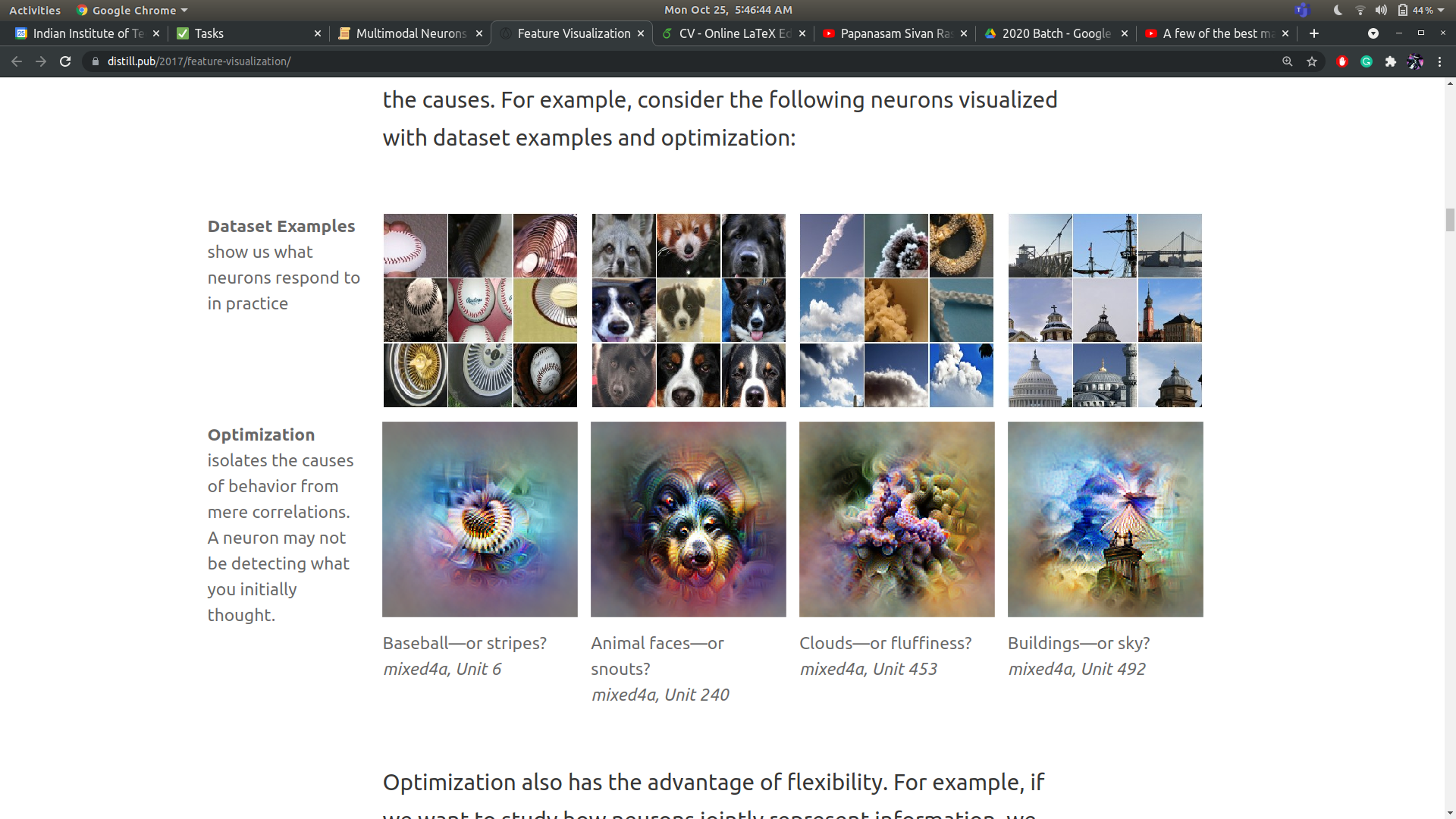

Feature visualization

[paper]


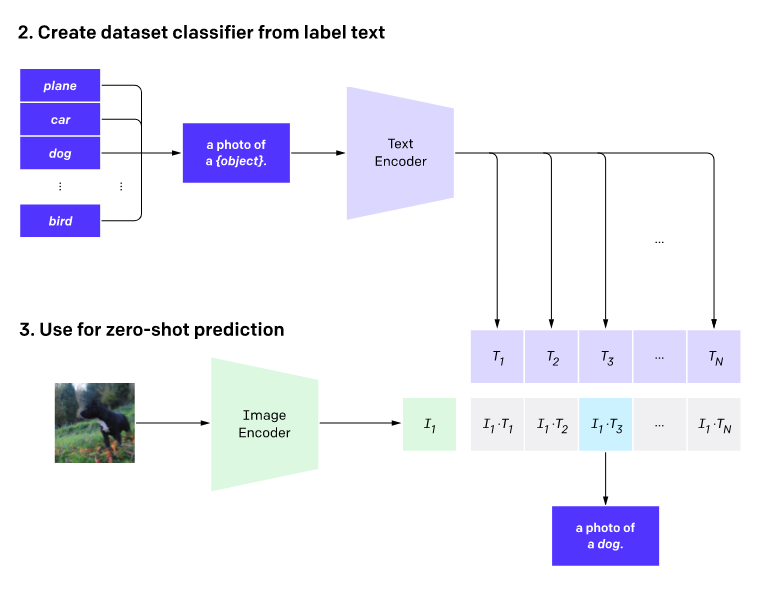

A primer on CLIP

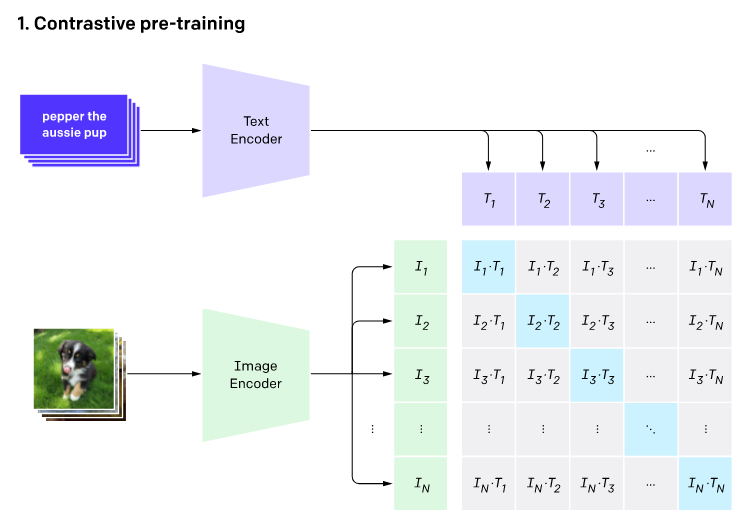

CLIP: Contrastive Language-Image Pretraining


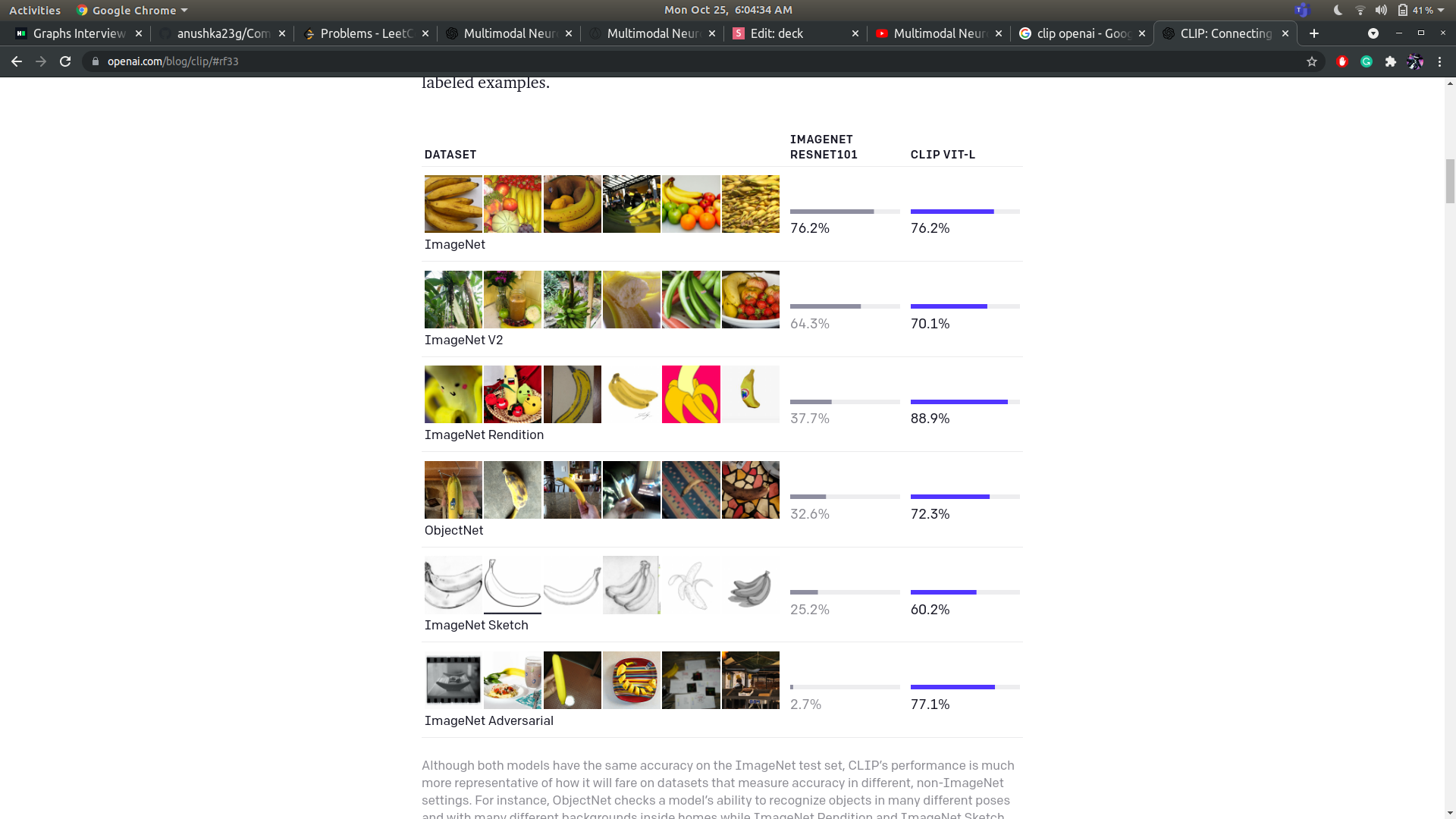

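- A family of models trained to predict how well an image and a piece of text match (image-text similarity)

- Trained on a huge dataset: 400M image-text pairs collected from the internet

- Achieves impressive zero-shot performance on a diverse range of benchmarks

- Supervised by natural-language captions instead of class labels \(\rightarrow\) no need for hand-labeled data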
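The "predict image-text similarity" objective is contrastive: in a batch of n matching image-text pairs, each image should be most similar to its own caption, and each caption to its own image. The NumPy sketch below computes that symmetric cross-entropy loss on precomputed embeddings. The random embeddings are stand-ins for CLIP's image and text encoders (assumption), and the temperature is fixed here, whereas CLIP learns it during training.

```python
import numpy as np

def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_contrastive_loss(image_emb: np.ndarray, text_emb: np.ndarray,
                          temperature: float = 0.07) -> float:
    """Symmetric cross-entropy over the n x n image-text similarity matrix.

    Row i of image_emb and row i of text_emb are assumed to be a matching pair;
    every other pairing in the batch acts as a negative example.
    """
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature        # (n, n) scaled cosine similarities
    diag = np.arange(logits.shape[0])         # correct pairs sit on the diagonal
    loss_img_to_txt = -log_softmax(logits, axis=1)[diag, diag].mean()  # pick the right caption
    loss_txt_to_img = -log_softmax(logits, axis=0)[diag, diag].mean()  # pick the right image
    return float((loss_img_to_txt + loss_txt_to_img) / 2)

rng = np.random.default_rng(0)
images = rng.normal(size=(4, 8))                          # stand-in image embeddings
aligned_texts = images + 0.05 * rng.normal(size=(4, 8))   # captions that match their images
shuffled_texts = aligned_texts[[1, 2, 3, 0]]              # same captions, wrongly paired

loss_aligned = clip_contrastive_loss(images, aligned_texts)
loss_shuffled = clip_contrastive_loss(images, shuffled_texts)
```

With captions aligned to their images, the correct pairs dominate the diagonal and the loss is low; shuffling the captions breaks the pairing and the loss rises sharply.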


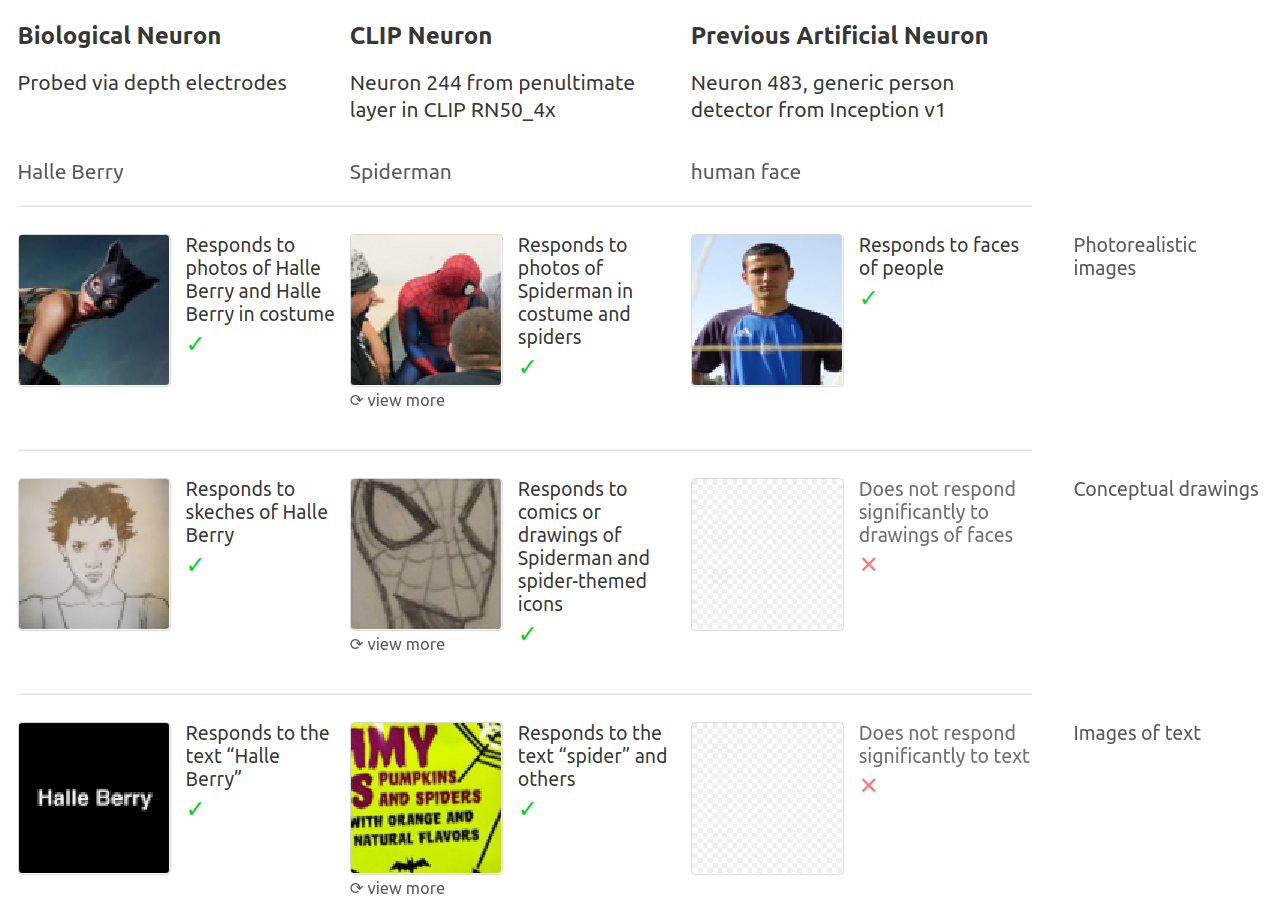

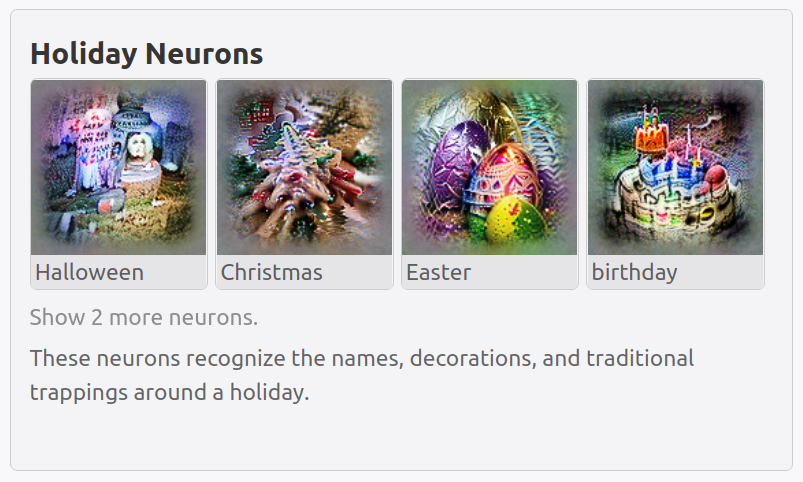

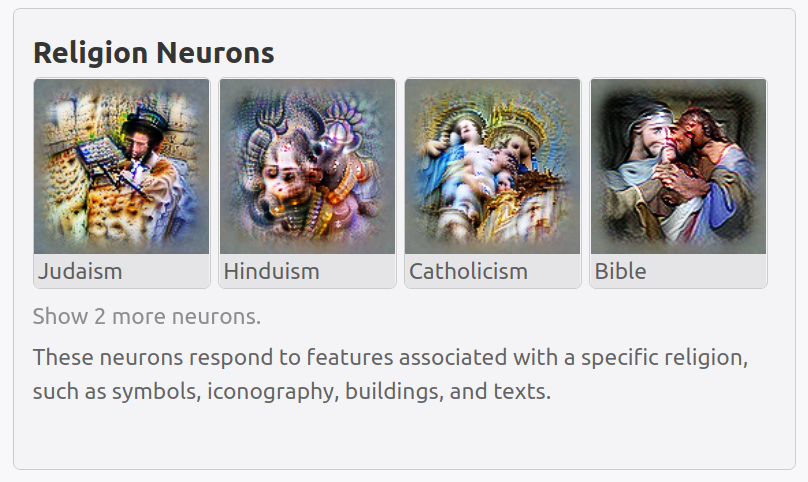

Multimodal neurons

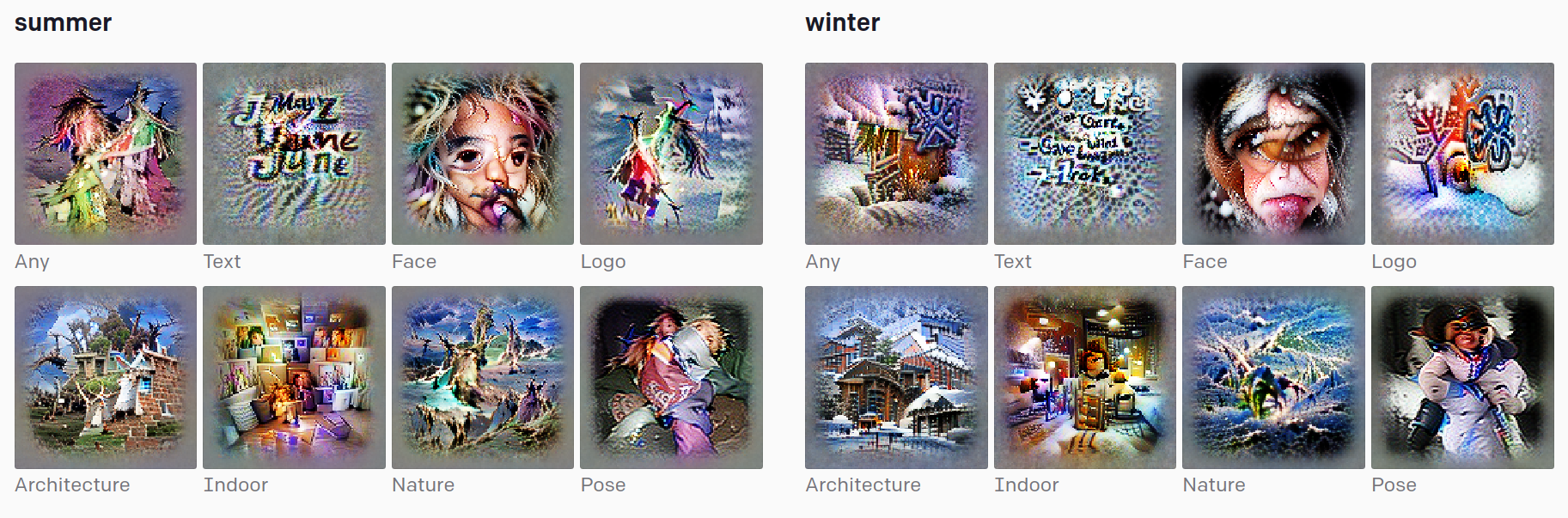

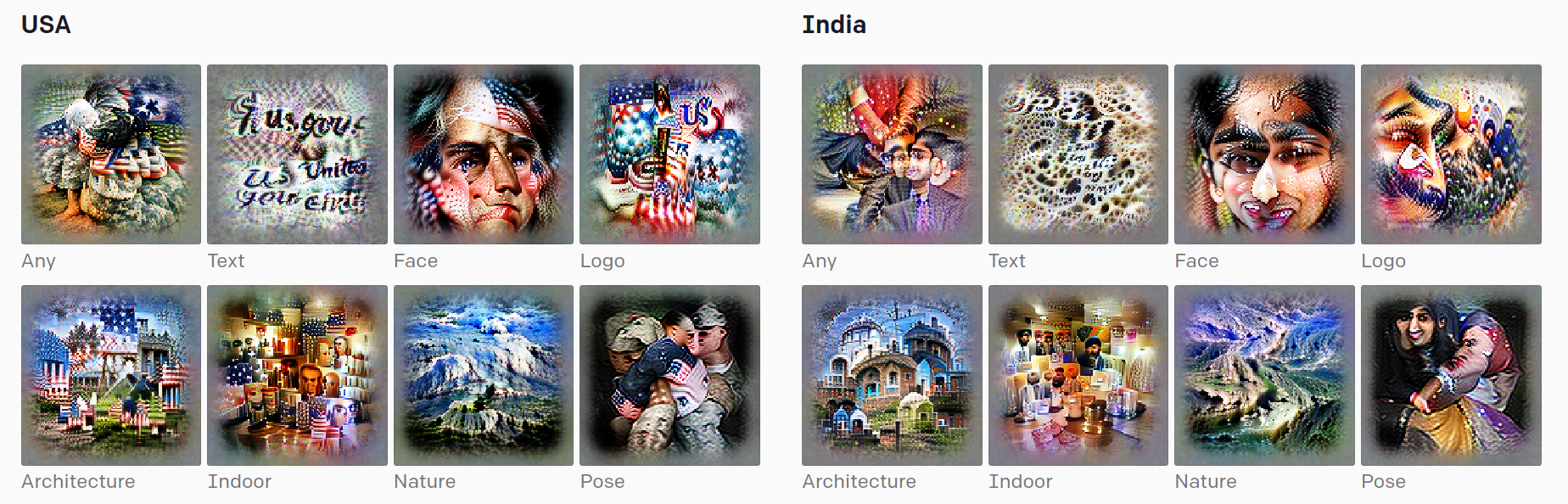

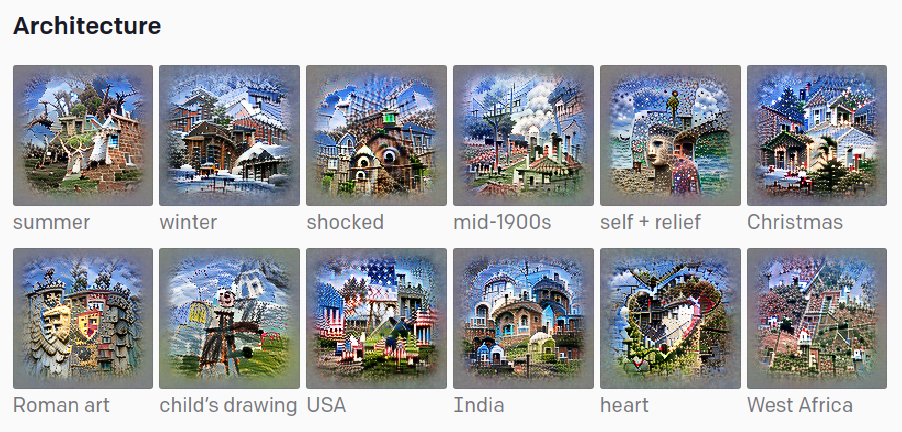

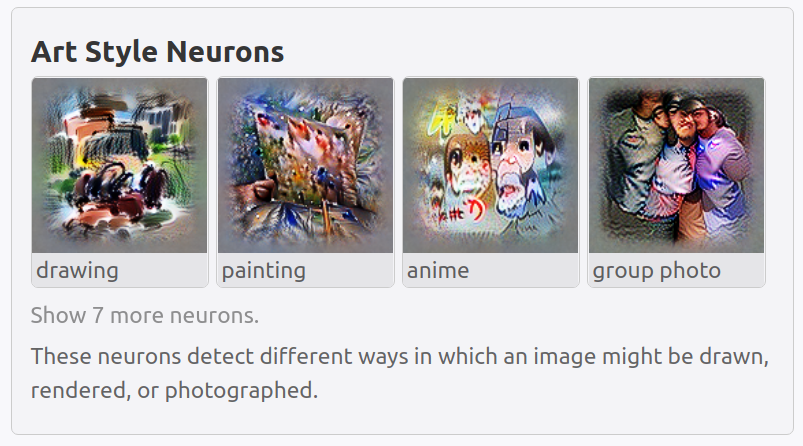

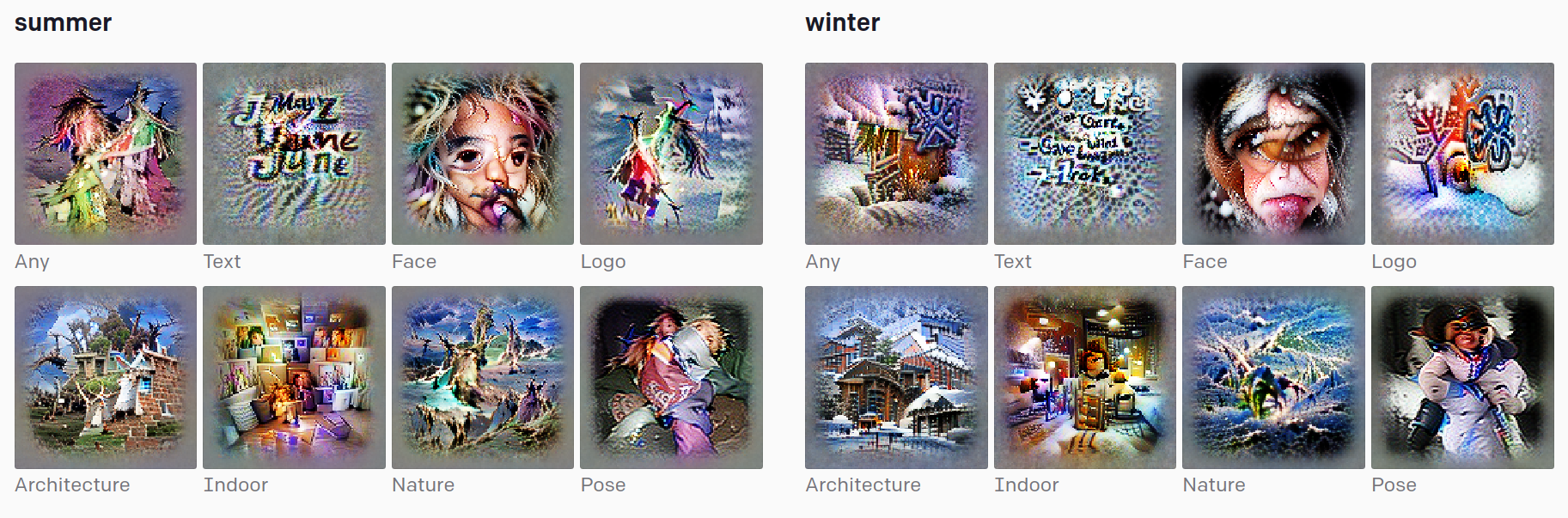

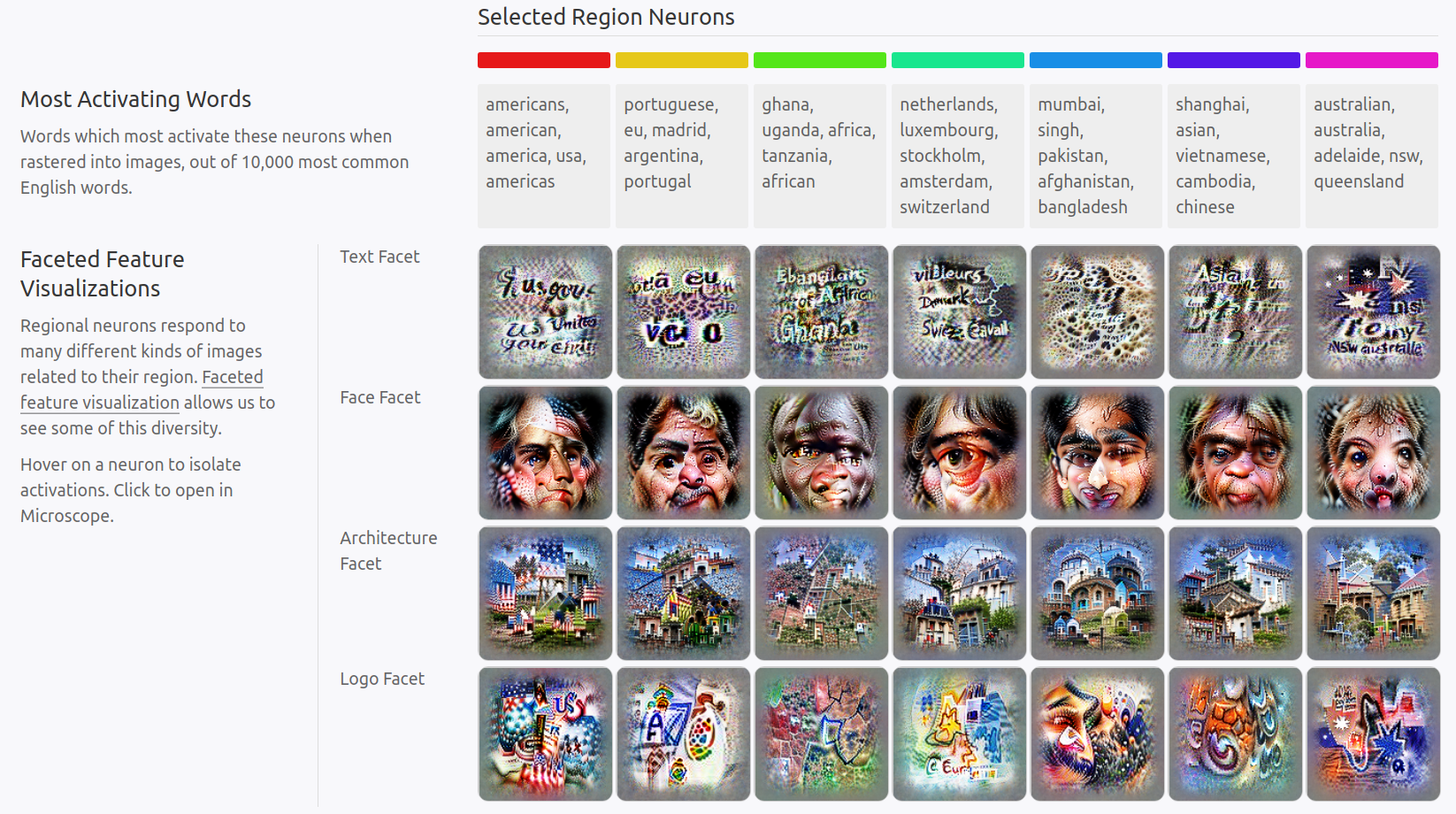

Multi-faceted feature visualization

- Neurons often fire for multiple "facets"

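- E.g., a grocery-store neuron fires for storefronts as well as for rows of products on shelves

- Standard feature visualization struggles with such neurons: a single optimized image tends to show only one facet, or a blend of several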

- Past ideas

- Use diverse seeds in optimization [1]

- Add a diversity term to the loss [2]

- Approach

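- Train a linear probe \(W\) on the model's activations to classify images into a facet (e.g., face, text)

- Add a term based on \(W\) to the visualization objective, steering the optimized image toward the chosen facet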
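The slides don't spell out the exact objective, so the following is a deliberately simplified toy rather than the paper's formulation. A linear map `A` stands in for the network's lower layers, `v` is a hypothetical readout for the neuron being visualized, `w` plays the role of the row of the probe \(W\) for the chosen facet, and an L2 penalty on the input keeps the optimization bounded. Gradient ascent then maximizes the neuron's activation plus `lam` times the facet probe's score.

```python
import numpy as np

def faceted_objective_grad(x, A, v, w, lam):
    """Gradient of the toy objective J(x) = v.(A x) + lam * w.(A x) - 0.5 * ||x||^2.

    A   -- toy linear feature map (stand-in for the network's lower layers)
    v   -- hypothetical readout for the neuron we want to visualize
    w   -- hypothetical linear-probe weights for the chosen facet
    lam -- how strongly to push the visualization toward that facet
    """
    return A.T @ (v + lam * w) - x

def visualize(A, v, w, lam, steps=200, lr=0.1):
    """Plain gradient ascent on J, starting from a blank input."""
    x = np.zeros(A.shape[1])
    for _ in range(steps):
        x = x + lr * faceted_objective_grad(x, A, v, w, lam)
    return x

rng = np.random.default_rng(1)
A = rng.normal(size=(5, 3))       # 3 input "pixels" -> 5 hidden units
v = rng.normal(size=5)            # neuron readout (hypothetical)
w_facet = rng.normal(size=5)      # facet probe row (hypothetical)

x_plain = visualize(A, v, w_facet, lam=0.0)   # ordinary feature visualization
x_facet = visualize(A, v, w_facet, lam=2.0)   # faceted feature visualization
```

Because the toy objective is strictly concave, gradient ascent converges to the closed form \(x^* = A^\top(v + \lambda w)\), and raising `lam` moves the result toward inputs the probe scores higher for the chosen facet. In a real network the same idea is applied to a deep model with a proper image parameterization.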

Person neurons

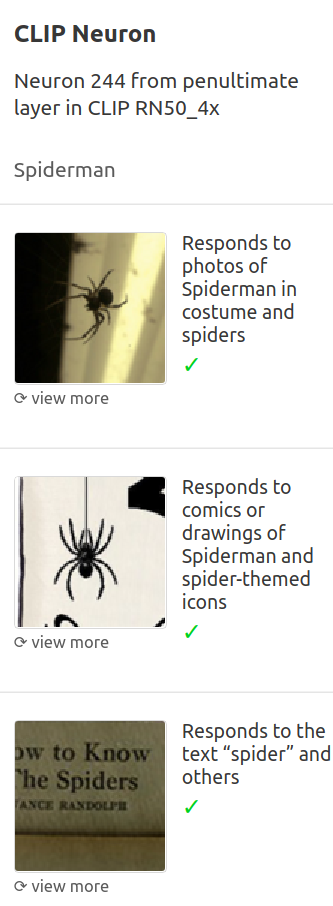

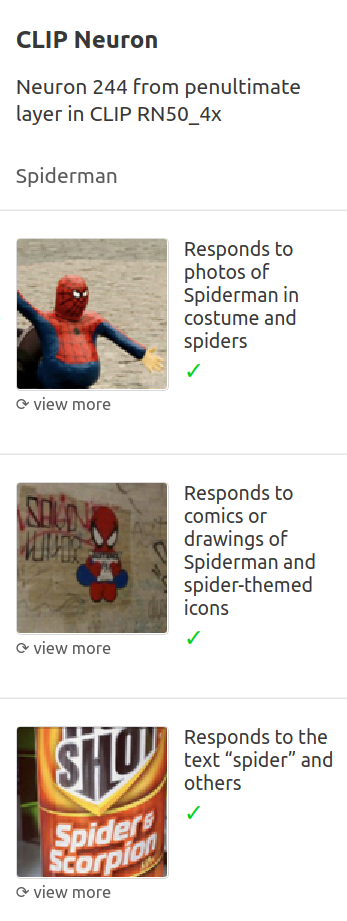

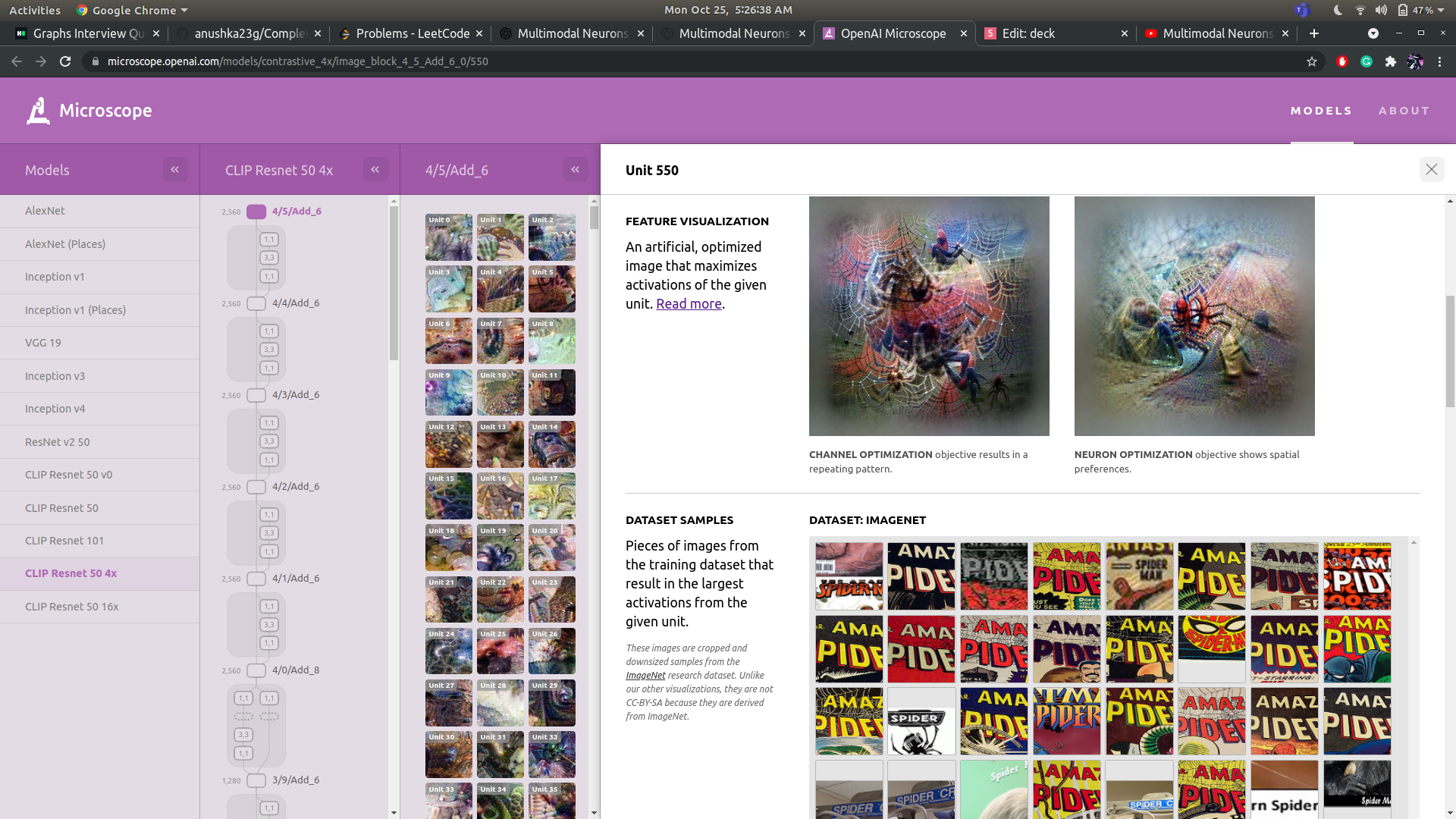

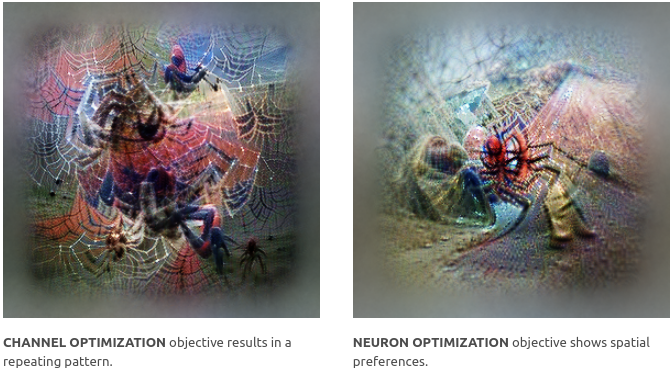

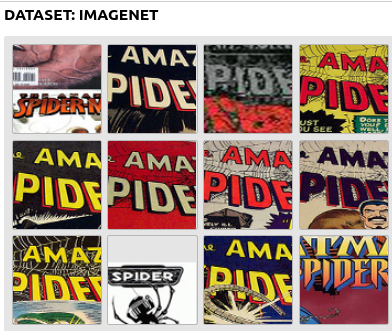

Spider-Man neuron

- Responds to photos and drawings of Spider-Man, and to renderings of the text 'spiderman' (and 'spider')

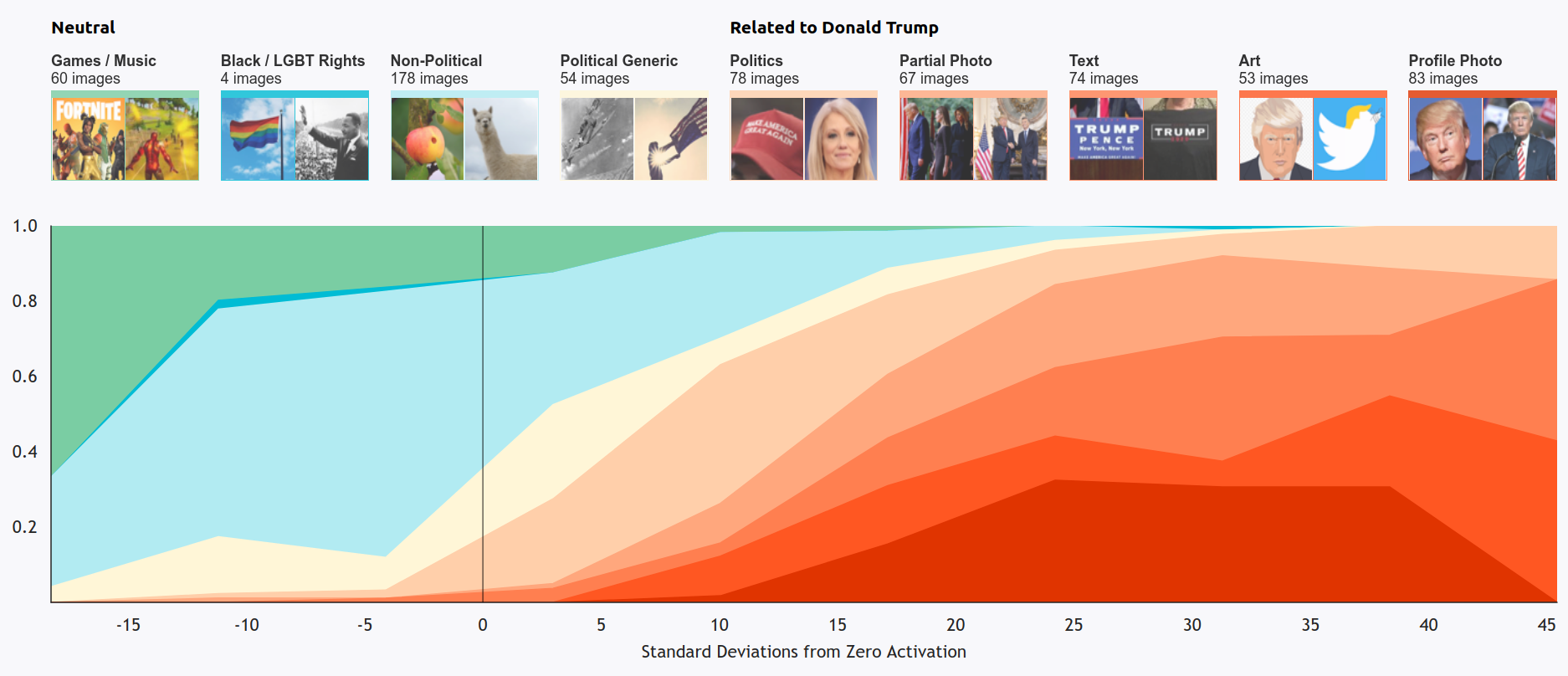

Person neurons: Donald Trump neuron


- Case study: which dataset images activate the neuron, and how strongly?
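A case study like this can be sketched as dataset search: rank images by the neuron's activation, then check which kinds of images fall into each activation range. The records, category names, and bin edges below are hypothetical placeholders, not data from the paper; in practice the activations would come from running the model over a dataset and the categories from hand labeling.

```python
from collections import Counter

def top_k_examples(records, k):
    """Return the k (image_id, category, activation) records with the highest activation."""
    return sorted(records, key=lambda r: r[2], reverse=True)[:k]

def category_share_by_bin(records, edges):
    """For each activation bin [edges[i], edges[i+1]), return the fraction of
    images in that bin belonging to each category (empty dict if the bin is empty)."""
    shares = []
    for lo, hi in zip(edges, edges[1:]):
        cats = [cat for _, cat, act in records if lo <= act < hi]
        counts = Counter(cats)
        total = len(cats)
        shares.append({c: n / total for c, n in counts.items()} if total else {})
    return shares

# Hypothetical (image_id, hand-assigned category, activation) triples.
records = [
    ("img0", "photo of the person", 9.1),
    ("img1", "text with the name", 7.4),
    ("img2", "photo of the person", 8.3),
    ("img3", "related topic", 3.2),
    ("img4", "unrelated", 0.4),
    ("img5", "unrelated", -0.2),
]

top_two = top_k_examples(records, 2)
shares = category_share_by_bin(records, [-1.0, 2.0, 6.0, 10.0])
```

Reading the bins from lowest to highest shows how the mix of image types shifts as the activation grows, which is the question the case study asks.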

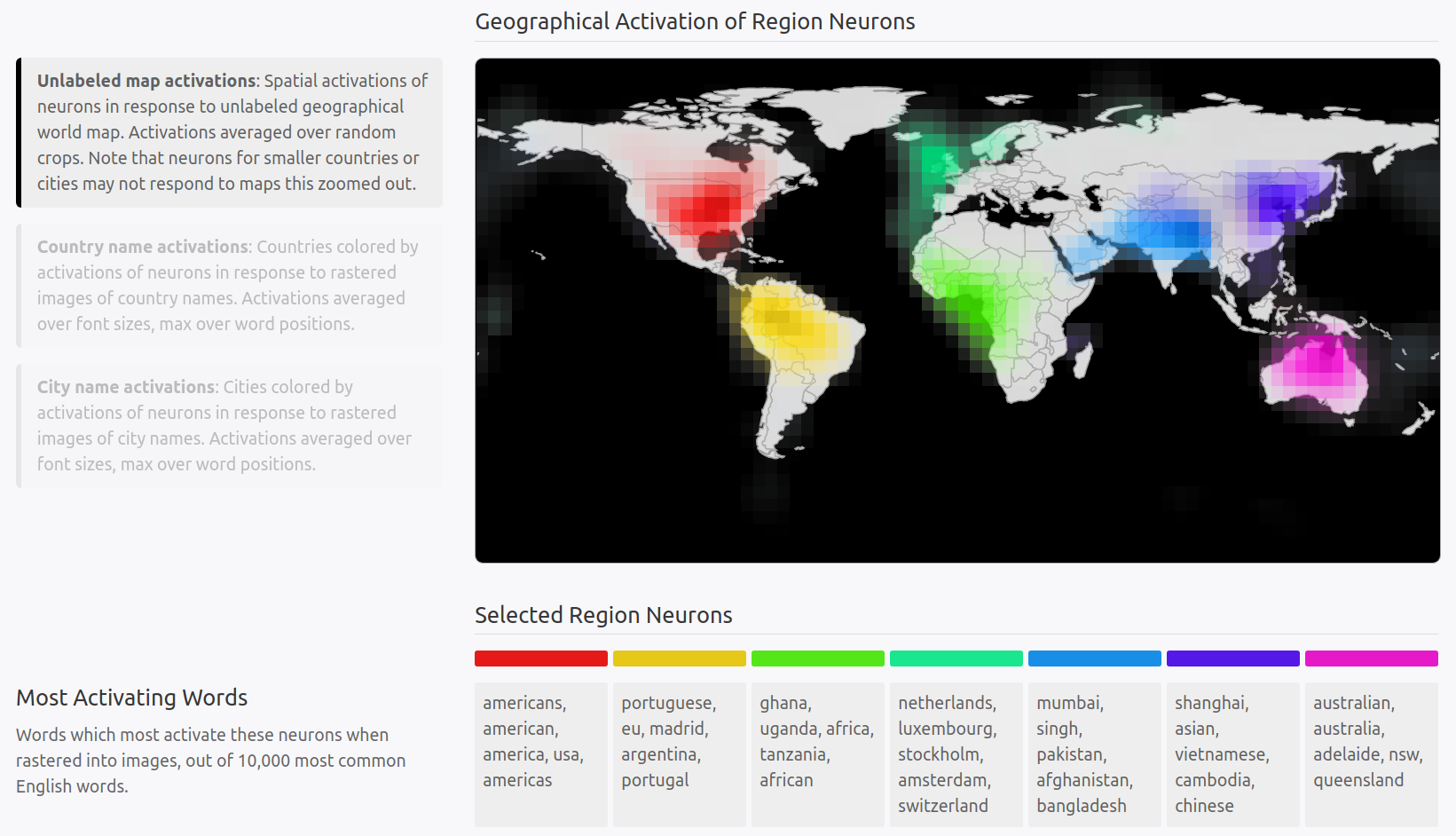

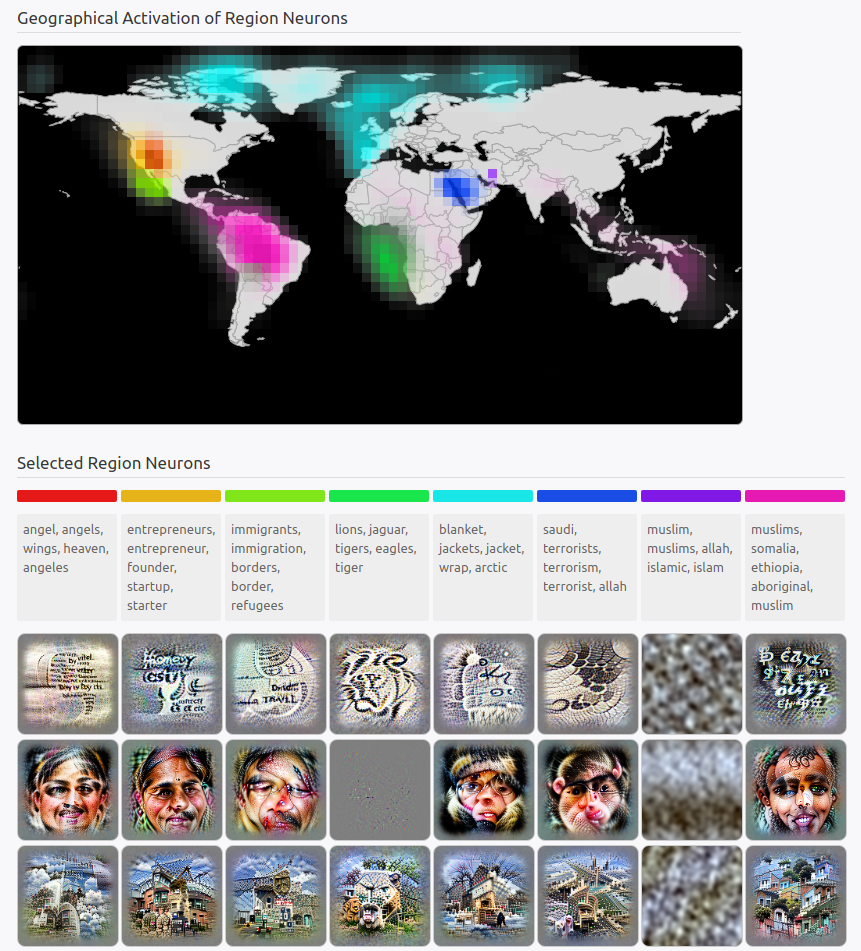

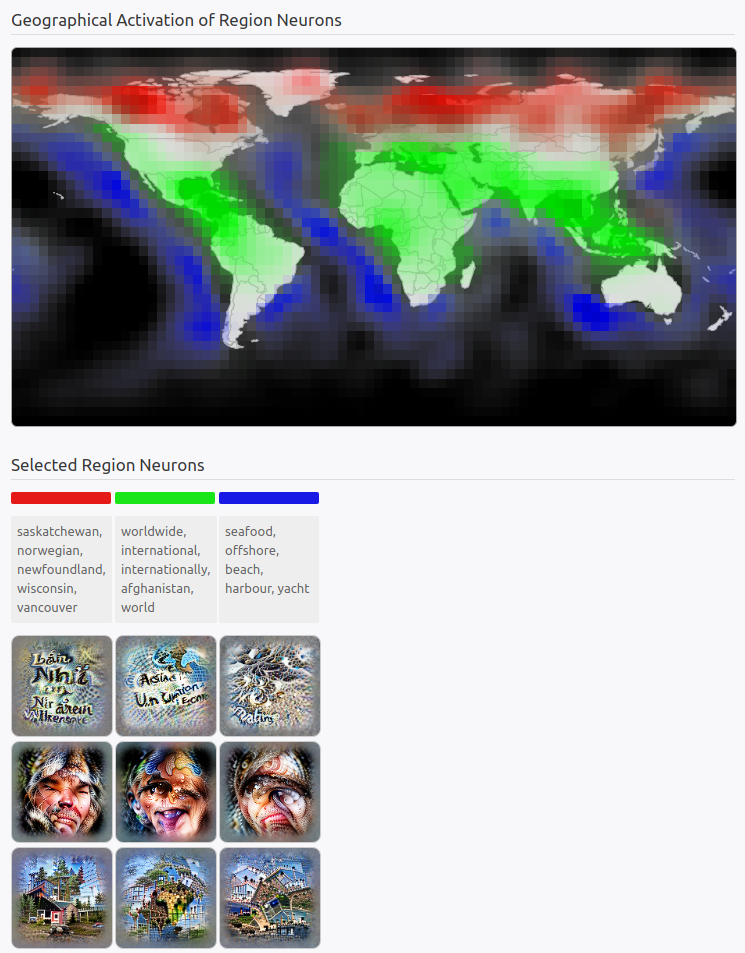

Region neurons



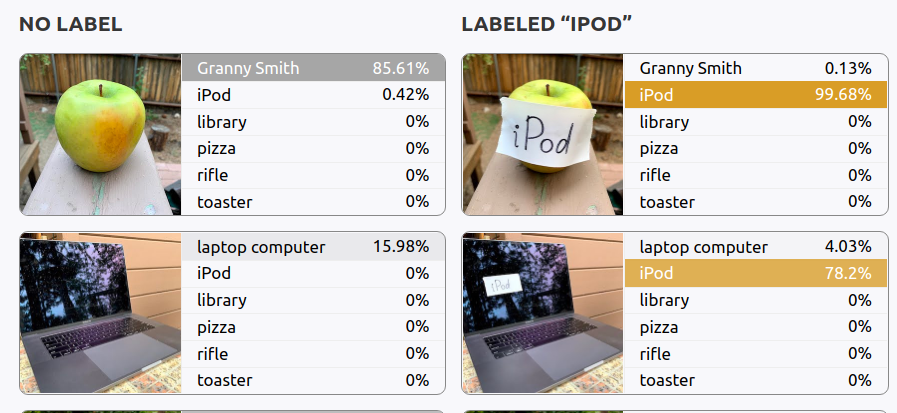

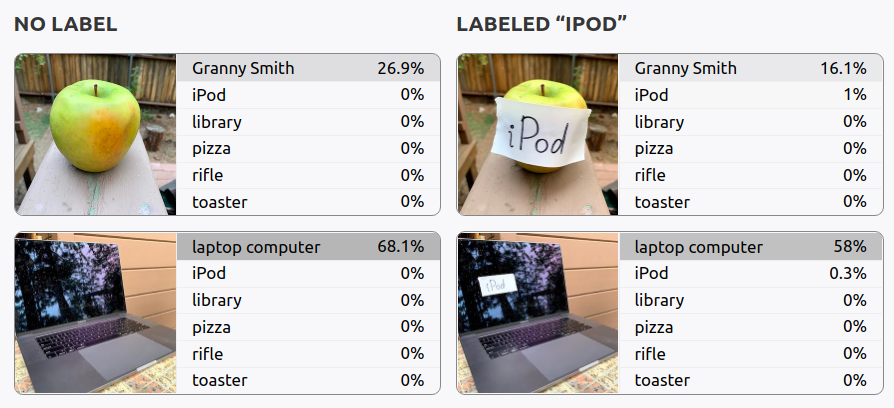

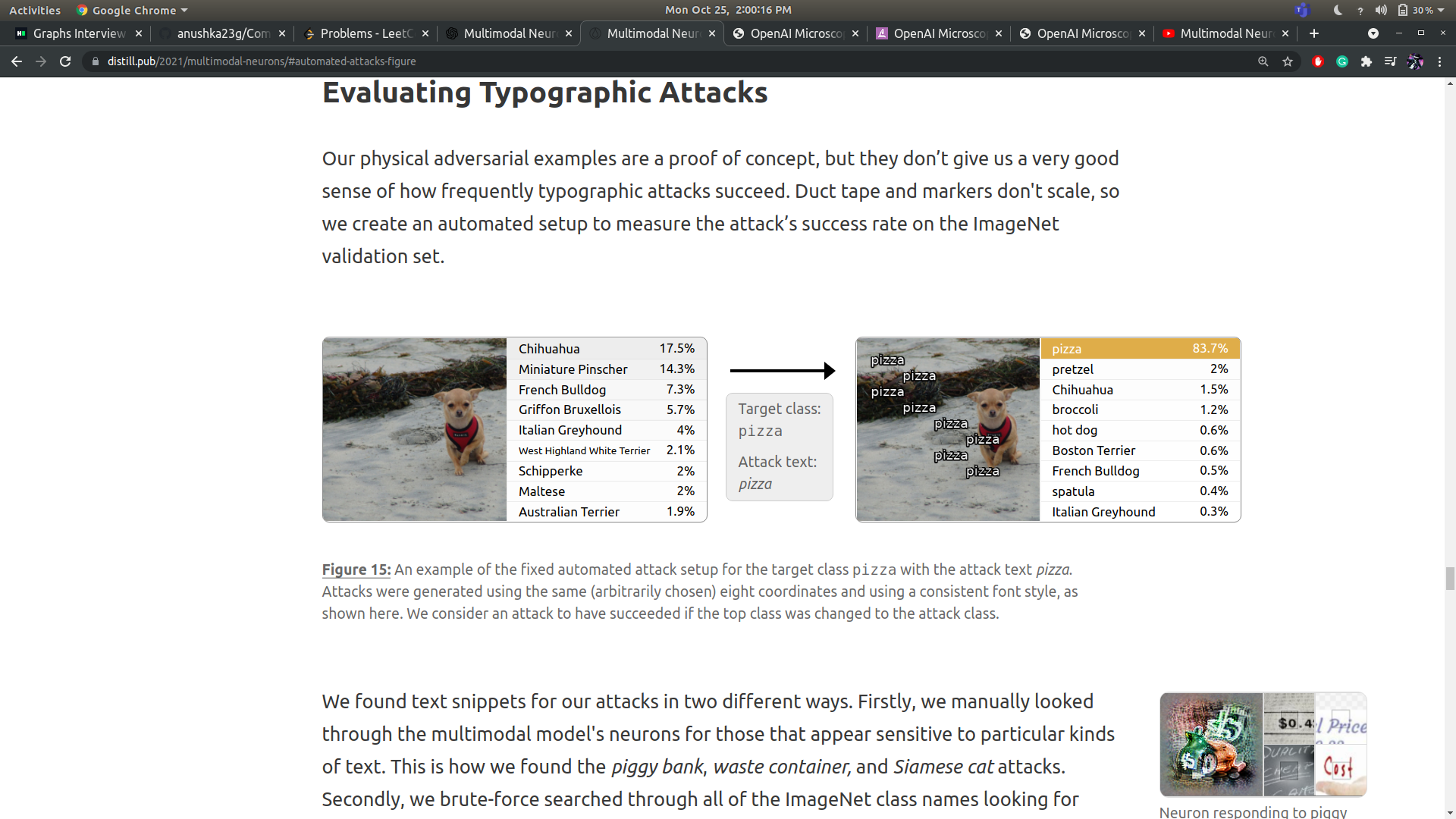

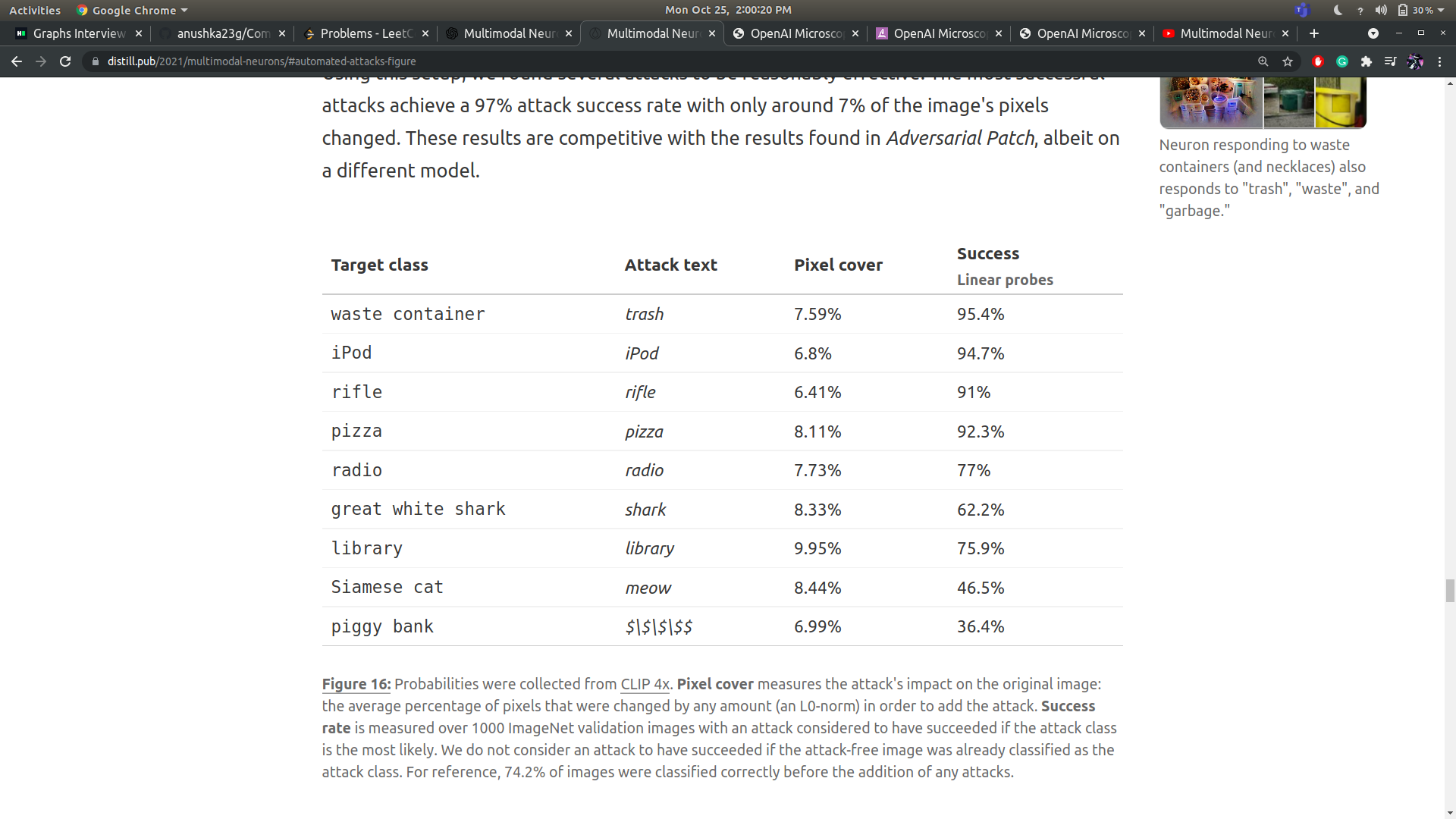

Typographic attacks

Figure panels: zero-shot vs. linear probe


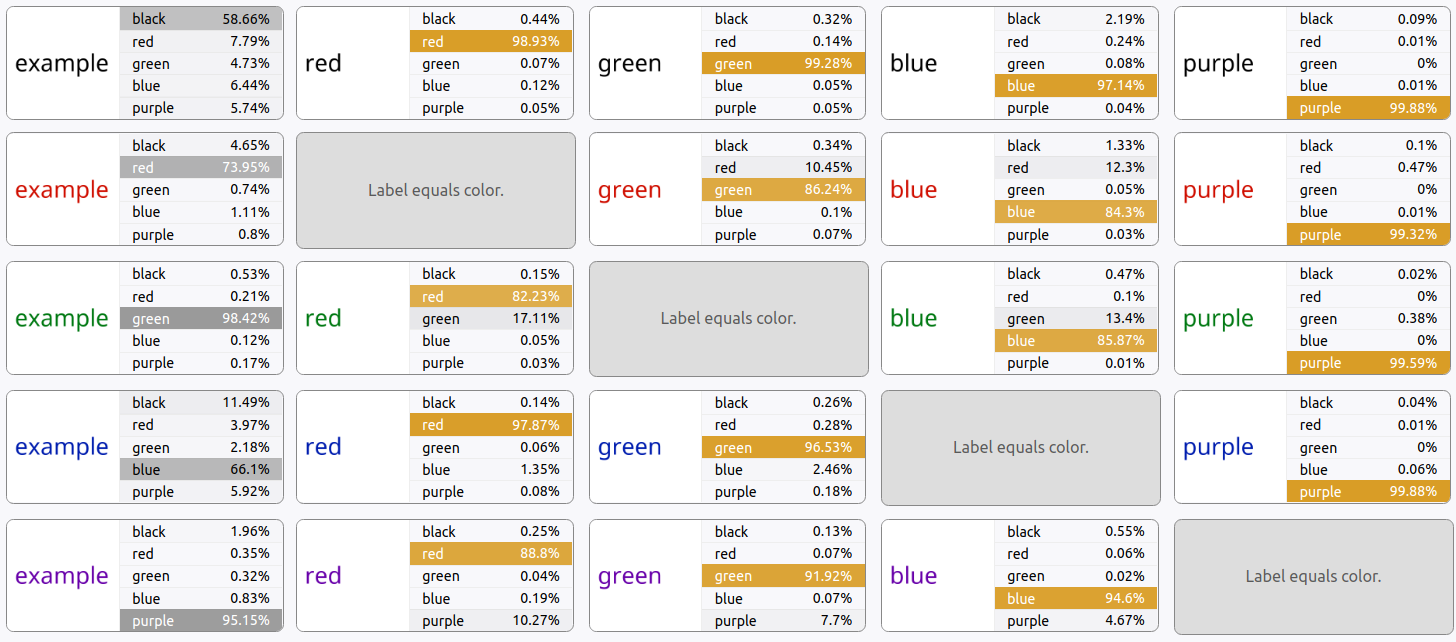

Typographic attacks: the Stroop effect

Activations were gathered using the zero-shot methodology with the prompt "my favorite word, written in the color _____".
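The zero-shot methodology amounts to filling the blank in the prompt with each candidate color, embedding every prompt, and choosing the prompt whose embedding is most similar to the image's. A minimal NumPy sketch follows. The encoder outputs are random stand-ins (no real CLIP model is loaded), and the function names and temperature value are assumptions for illustration.

```python
import numpy as np

def build_prompts(template, labels):
    """Fill the blank ("_____") in a prompt template with each candidate label."""
    return [template.replace("_____", label) for label in labels]

def zero_shot_probs(image_emb, text_embs, temperature=0.01):
    """Softmax over cosine similarities between one image and every prompt."""
    img = image_emb / np.linalg.norm(image_emb)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    logits = (txt @ img) / temperature
    logits = logits - logits.max()            # subtract max for numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()

labels = ["red", "green", "blue"]
prompts = build_prompts("my favorite word, written in the color _____", labels)

# Stand-ins for encoder outputs: a real pipeline would embed `prompts` with
# CLIP's text encoder and the image with CLIP's image encoder.
rng = np.random.default_rng(0)
text_embs = rng.normal(size=(len(labels), 16))
image_emb = text_embs[1] + 0.1 * rng.normal(size=16)  # constructed to lie near "green"

probs = zero_shot_probs(image_emb, text_embs)
predicted = labels[int(np.argmax(probs))]
```

The Stroop-style test uses this same machinery; what changes is the image, which shows a color word printed in a different ink color, so the prediction reveals whether the written word or the ink wins.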

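Conclusion

- Like the multimodal neurons found in the human brain, CLIP's neurons can respond to the same concept across photos, drawings, and text

- Feature visualization and dataset search are powerful tools for inspecting what individual neurons respond to

- These neurons form families (e.g., region, person, emotion) that can be examined together

- CLIP is vulnerable to low-tech, in-the-wild typographic attacks (e.g., an apple with a handwritten "iPod" label)

- Bias: some neurons encode stereotyped or offensive associations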

Resources

- The paper itself

- The accompanying OpenAI blog post

- OpenAI Microscope

- Yannic Kilcher's video